Here at DataBlick we're known for doing amazing things with Tableau and teaching others. Our Padawan Dojo series is for new users (and users who help others) to learn how to do your own great things in Tableau. This lesson is about: learning an important mental model for working with Tableau, understanding when and how to do the equivalent of an Excel SUMIF() in Tableau, and finally how to validate the results.

The other day I (Jonathan) was helping a new Tableau user to take a data source of sales opportunities that looked something like this:

...to a view where there are rows for the Expected Revenue of the full pipeline, all opportunities with >25% confidence level, all opportunities with >50% confidence level, and all with >75% confidence level, like this:

This is a really good example of switching from spreadsheet-based thinking to Tableau-thinking when we start using Tableau. In a spreadsheet like Excel we can have a set of data and arbitrarily sum up cells in that data via whatever cell references we want. So once a row of data has been identified as belonging to a condition such as being at a certain confidence level, for example by using a formula like =SUMIF(Data!B:B,">25",Data!C:C) function then we can create a few of more SUMIF() functions and have our results:

Tableau doesn't do arbitrary cell references; instead Tableau "thinks" like a database, and that requires a different mental model. Read on for how to think differently for Tableau.

Right Direction, Wrong Calculation

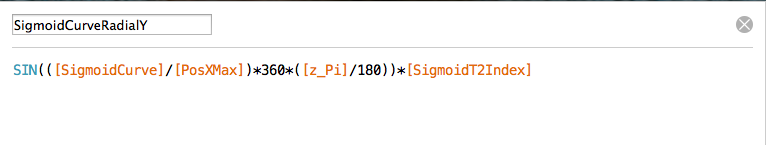

A really common notion for spreadsheet users when they first start building a calculation like this in Tableau is to think that since there needs to be a set of values (the three confidence levels and full pipeline) that don't exist in the data and we want four marks (numbers) then that must mean that we need to build a dimension to create the four buckets. They will write a formula like this Pipeline calc, with the idea to assign the different confidence levels to different buckets:

IF [Confidence %] > -1 THEN

'Full Pipeline'

ELSEIF [Confidence %] > 25 THEN

'25%+ Confidence'

ELSEIF [Confidence %] > 50 THEN

'50%+ Confidence'

ELSEIF [Confidence %] > 75 THEN

'75%+ Confidence'

END

Then when they bring a calc like this into a view it fails, it only returns a single bucket:

The reason this calc fails is a combination of a couple of factors:

- When we build a calculation in Tableau we are effectively adding a new column to the data where each record will have one and only one result value of that calculation.

- Since this calculation uses an IF/THEN statement Tableau starts at beginning of the formula and will stop once it reaches the first TRUE condition, if there aren't any TRUE conditions then it will use the ELSE, and finally if there are no TRUE conditions and no ELSE then it will return Null.

For example if a record has a 75% Confidence then the calculation is going to only return 'Full Pipeline' for that record and not count the record in any of the other buckets.

Knowing that there needs to be a way to get four numbers as a result is of course the goal, and the idea of doing four evaluations is correct, but stuffing them all into one calculated field is incorrect because a calculated field can only return a single result for each record.

Let's give another example of how this works. For example if we just want to categorize the Confidence into Low/Medium/High and then count the records (or sum the Expected Value) for each bucket then we could use a formula like this:

IF [Confidence %] < 33 THEN

'Low'

ELSEIF [Confidence %] < 67 THEN

'Medium'

ELSE

'High'

END

Again, this only returns a single result per record, and then we could create a view that would show the number of records in each bucket, but still each record is only counted once.

Four Calculations

What we really need to do is count the same record *multiple times*, one for each bucket. If there's anything you can take away from this post, it's this bit of awareness of. By default Tableau is not going to double-count (or quadruple-count) our records of data, to do so would make for very inaccurate views. So we have to set up Tableau so that it will consider each record for each of the four buckets. In fact, that's exactly what we did in the the original spreadsheet with four SUMIFs, each one went through the entire data set. It's the translation of that SUMIF into Tableau where we ran into a problem. So to help with that translation let's take a SUMIF() calculation apart. It's really doing two steps:

- There's an IF statement that is computed for each record in the data source, for example for the >25% Confidence field it only returns the Expected Revenue when the value of the Confidence cell is >25%.

- The results of that whole set of results are summed up (aggregated) across the whole data set.

Let's demonstrate this in Excel, then in Tableau. In Excel we can take the data and add four new columns with formulas like =IF(B2>25,C2,0) and fill those down:

Then at the bottom add the totals with a formula like =SUM(D2:D1319):

In Tableau we can do the same, i.e. do the IF statement and then SUM it, however we only need to write four calculated fields, we don't need to do any fill down operations because Tableau will apply the calculation to each result and we don't need to do any sum because Tableau can do it for us.

Here's the Full Pipeline calc:

IF [Confidence %] > -1 THEN

[Expected Revenue]

END

What this does is return the Expected Revenue for each record when the Confidence for that record is greater than -1, and if not then it returns Null. (ou can think of this IF statment has having an invisible "ELSE Null" just before the END, like this:

IF [Confidence %] > -1 THEN

[Expected Revenue]

ELSE

Null

END

Here's the >25% Confidence formula:

IF [Confidence %] > 25 THEN

[Expected Revenue]

END

The >50% and >75% Confidence formulas are similar. The next step in building out calculations like this is to validate that they are working as desired, and there are a variety of ways we can do this in Tableau, we'll show you two here.

View Data

If your data set doesn't have too many columns then the fastest can be to use the View Data button on the Dimensions window:

Going from left to right there are columns for all the dimensions and measures, with the columns sorted alphabetically. You can drag columns to be closer to one another for eaiser comparison. Here we can quickly see for each record in the data source that the calculations are returning desired results.

Going from left to right there are columns for all the dimensions and measures, with the columns sorted alphabetically. You can drag columns to be closer to one another for eaiser comparison. Here we can quickly see for each record in the data source that the calculations are returning desired results.

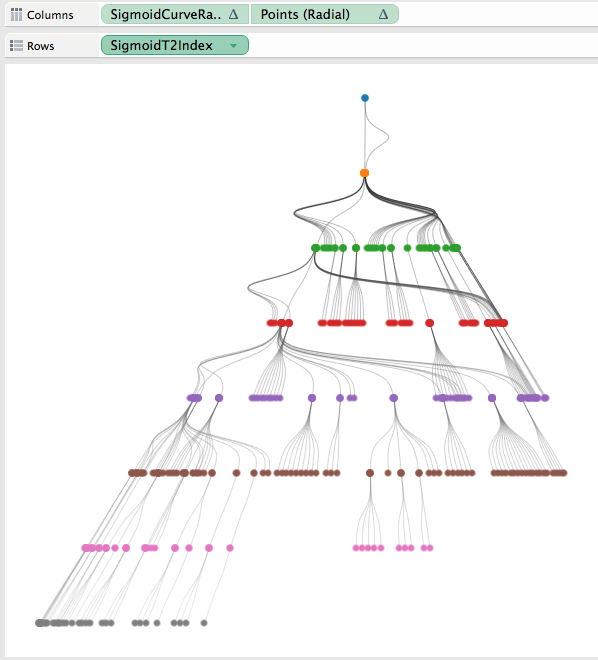

Build a Viz with Record Level of Detail

An alternative to using the View Data window is to build a worksheet that is at the record level of detail. For that we need to know the dimension(s) that create a unique key to identify each record, in this case it's the Opportunity ID. Here's a way to test that:

1) Drag the Number of Records measure to Color. Tableau automatically applies the SUM() aggregation and returns the number of records for the entire data source, and that is what is on the Color Legend - 1,318 records in this case:

2) Drag the Opportunity ID dimension to Rows. Tableau now computes the SUM(Number of Records) for each distinct Opportunity ID.

There are 1,318 distinct Opportunity IDs in the data (indicated by the 1,318 rows message in the status bar) so SUM(Number of Records) returns 1 for each, and that is what we are looking for in that color legend. If there was a range of values in the color legend then we'd know SUM(Number of Records) was returning more than 1 value and we'd know that Opportunity ISD wasn't a unique key.

3) Now that we know Opportunity ID really is the unique key we can drag SUM(Number of Records) off the view and know that whatever else we display here will be at the record level even though it's still got the SUM() aggregation around it.

4) The next step is to select all the measure(s) then click and drag to drop them on the Abc marks:

Tableau automatically builds out the text table showing all the values and we can validate from here. Once the validation is complete building the final view only takes a few more clicks, just remove Opportunity ID and the unneeded measures from the view:

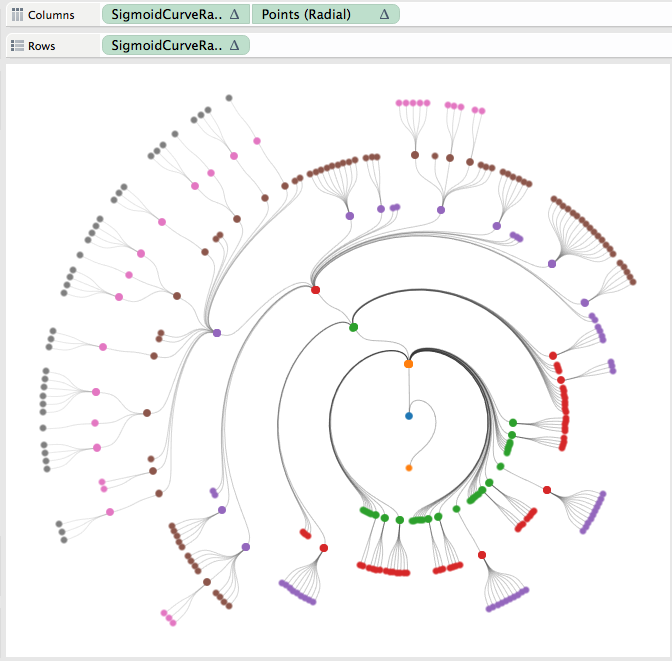

Measure Names is the (Lesser) Dimension We Wanted

Note that the view above that we built using Tableau's automatic Show Me behavior has Measure Names on Columns and Measure Values on the Text Shelf, with the list of measures in the Measure Values card. Above in the "Right Direction, Wrong Calculation" section I described how we can start out believing that we need a dimension to slice the data into the four buckets. That intuition isn't too far off because we really do need a discrete (blue) pill to generate the headers and that's exactly what Measure Names gets us. This feature of Tableau "pivots" the data to take a set of columns (the four measures in Measure Values) and creates the Measure Names lesser dimension. I write "lesser" here because it can't be used in calculated fields, can't be the target of a Filter Action, etc., however for our present purposes it's perfect.

Conclusion

We walked through a calculation in Excel using SUMIF() and explained how to create and validate the same results in Tableau, covering a few other bits of functionality along way. To help you follow along here's the SUMIF Example workbook on Tableau Public and the original Excel file.

If you think this was useful to you and want more of this learning in your organization please contact us at info@datablick.com! We can provide short one-on-one virtual sessions, multiple-day onsite trainings, and more. Also we tweet a Tableau tip of the day @helpmedatablick.

Going from left to right there are columns for all the dimensions and measures, with the columns sorted alphabetically. You can drag columns to be closer to one another for eaiser comparison. Here we can quickly see for each record in the data source that the calculations are returning desired results.

Going from left to right there are columns for all the dimensions and measures, with the columns sorted alphabetically. You can drag columns to be closer to one another for eaiser comparison. Here we can quickly see for each record in the data source that the calculations are returning desired results.